Dataset Viewer

prompt

stringlengths 501

4.98M

| target

stringclasses 1

value | chunk_prompt

bool 1

class | kind

stringclasses 2

values | prob

float64 0.2

0.97

⌀ | path

stringlengths 10

394

⌀ | quality_prob

float64 0.4

0.99

⌀ | learning_prob

float64 0.15

1

⌀ | filename

stringlengths 4

221

⌀ |

|---|---|---|---|---|---|---|---|---|

```

#export

from fastai.basics import *

from fastai.tabular.core import *

from fastai.tabular.model import *

from fastai.tabular.data import *

#hide

from nbdev.showdoc import *

#default_exp tabular.learner

```

# Tabular learner

> The function to immediately get a `Learner` ready to train for tabular data

The main function you probably want to use in this module is `tabular_learner`. It will automatically create a `TabulaModel` suitable for your data and infer the irght loss function. See the [tabular tutorial](http://docs.fast.ai/tutorial.tabular) for an example of use in context.

## Main functions

```

#export

@log_args(but_as=Learner.__init__)

class TabularLearner(Learner):

"`Learner` for tabular data"

def predict(self, row):

tst_to = self.dls.valid_ds.new(pd.DataFrame(row).T)

tst_to.process()

tst_to.conts = tst_to.conts.astype(np.float32)

dl = self.dls.valid.new(tst_to)

inp,preds,_,dec_preds = self.get_preds(dl=dl, with_input=True, with_decoded=True)

i = getattr(self.dls, 'n_inp', -1)

b = (*tuplify(inp),*tuplify(dec_preds))

full_dec = self.dls.decode((*tuplify(inp),*tuplify(dec_preds)))

return full_dec,dec_preds[0],preds[0]

show_doc(TabularLearner, title_level=3)

```

It works exactly as a normal `Learner`, the only difference is that it implements a `predict` method specific to work on a row of data.

```

#export

@log_args(to_return=True, but_as=Learner.__init__)

@delegates(Learner.__init__)

def tabular_learner(dls, layers=None, emb_szs=None, config=None, n_out=None, y_range=None, **kwargs):

"Get a `Learner` using `dls`, with `metrics`, including a `TabularModel` created using the remaining params."

if config is None: config = tabular_config()

if layers is None: layers = [200,100]

to = dls.train_ds

emb_szs = get_emb_sz(dls.train_ds, {} if emb_szs is None else emb_szs)

if n_out is None: n_out = get_c(dls)

assert n_out, "`n_out` is not defined, and could not be infered from data, set `dls.c` or pass `n_out`"

if y_range is None and 'y_range' in config: y_range = config.pop('y_range')

model = TabularModel(emb_szs, len(dls.cont_names), n_out, layers, y_range=y_range, **config)

return TabularLearner(dls, model, **kwargs)

```

If your data was built with fastai, you probably won't need to pass anything to `emb_szs` unless you want to change the default of the library (produced by `get_emb_sz`), same for `n_out` which should be automatically inferred. `layers` will default to `[200,100]` and is passed to `TabularModel` along with the `config`.

Use `tabular_config` to create a `config` and cusotmize the model used. There is just easy access to `y_range` because this argument is often used.

All the other arguments are passed to `Learner`.

```

path = untar_data(URLs.ADULT_SAMPLE)

df = pd.read_csv(path/'adult.csv')

cat_names = ['workclass', 'education', 'marital-status', 'occupation', 'relationship', 'race']

cont_names = ['age', 'fnlwgt', 'education-num']

procs = [Categorify, FillMissing, Normalize]

dls = TabularDataLoaders.from_df(df, path, procs=procs, cat_names=cat_names, cont_names=cont_names,

y_names="salary", valid_idx=list(range(800,1000)), bs=64)

learn = tabular_learner(dls)

#hide

tst = learn.predict(df.iloc[0])

#hide

#test y_range is passed

learn = tabular_learner(dls, y_range=(0,32))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

learn = tabular_learner(dls, config = tabular_config(y_range=(0,32)))

assert isinstance(learn.model.layers[-1], SigmoidRange)

test_eq(learn.model.layers[-1].low, 0)

test_eq(learn.model.layers[-1].high, 32)

#export

@typedispatch

def show_results(x:Tabular, y:Tabular, samples, outs, ctxs=None, max_n=10, **kwargs):

df = x.all_cols[:max_n]

for n in x.y_names: df[n+'_pred'] = y[n][:max_n].values

display_df(df)

```

## Export -

```

#hide

from nbdev.export import notebook2script

notebook2script()

```

| true |

code

| 0.704262 | null | null | null | null |

|

# Aerospike Connect for Spark - SparkML Prediction Model Tutorial

## Tested with Java 8, Spark 3.0.0, Python 3.7, and Aerospike Spark Connector 3.0.0

## Summary

Build a linear regression model to predict birth weight using Aerospike Database and Spark.

Here are the features used:

- gestation weeks

- mother’s age

- father’s age

- mother’s weight gain during pregnancy

- [Apgar score](https://en.wikipedia.org/wiki/Apgar_score)

Aerospike is used to store the Natality dataset that is published by CDC. The table is accessed in Apache Spark using the Aerospike Spark Connector, and Spark ML is used to build and evaluate the model. The model can later be converted to PMML and deployed on your inference server for predictions.

### Prerequisites

1. Load Aerospike server if not alrady available - docker run -d --name aerospike -p 3000:3000 -p 3001:3001 -p 3002:3002 -p 3003:3003 aerospike

2. Feature key needs to be located in AS_FEATURE_KEY_PATH

3. [Download the connector](https://www.aerospike.com/enterprise/download/connectors/aerospike-spark/3.0.0/)

```

#IP Address or DNS name for one host in your Aerospike cluster.

#A seed address for the Aerospike database cluster is required

AS_HOST ="127.0.0.1"

# Name of one of your namespaces. Type 'show namespaces' at the aql prompt if you are not sure

AS_NAMESPACE = "test"

AS_FEATURE_KEY_PATH = "/etc/aerospike/features.conf"

AEROSPIKE_SPARK_JAR_VERSION="3.0.0"

AS_PORT = 3000 # Usually 3000, but change here if not

AS_CONNECTION_STRING = AS_HOST + ":"+ str(AS_PORT)

#Locate the Spark installation - this'll use the SPARK_HOME environment variable

import findspark

findspark.init()

# Below will help you download the Spark Connector Jar if you haven't done so already.

import urllib

import os

def aerospike_spark_jar_download_url(version=AEROSPIKE_SPARK_JAR_VERSION):

DOWNLOAD_PREFIX="https://www.aerospike.com/enterprise/download/connectors/aerospike-spark/"

DOWNLOAD_SUFFIX="/artifact/jar"

AEROSPIKE_SPARK_JAR_DOWNLOAD_URL = DOWNLOAD_PREFIX+AEROSPIKE_SPARK_JAR_VERSION+DOWNLOAD_SUFFIX

return AEROSPIKE_SPARK_JAR_DOWNLOAD_URL

def download_aerospike_spark_jar(version=AEROSPIKE_SPARK_JAR_VERSION):

JAR_NAME="aerospike-spark-assembly-"+AEROSPIKE_SPARK_JAR_VERSION+".jar"

if(not(os.path.exists(JAR_NAME))) :

urllib.request.urlretrieve(aerospike_spark_jar_download_url(),JAR_NAME)

else :

print(JAR_NAME+" already downloaded")

return os.path.join(os.getcwd(),JAR_NAME)

AEROSPIKE_JAR_PATH=download_aerospike_spark_jar()

os.environ["PYSPARK_SUBMIT_ARGS"] = '--jars ' + AEROSPIKE_JAR_PATH + ' pyspark-shell'

import pyspark

from pyspark.context import SparkContext

from pyspark.sql.context import SQLContext

from pyspark.sql.session import SparkSession

from pyspark.ml.linalg import Vectors

from pyspark.ml.regression import LinearRegression

from pyspark.sql.types import StringType, StructField, StructType, ArrayType, IntegerType, MapType, LongType, DoubleType

#Get a spark session object and set required Aerospike configuration properties

sc = SparkContext.getOrCreate()

print("Spark Verison:", sc.version)

spark = SparkSession(sc)

sqlContext = SQLContext(sc)

spark.conf.set("aerospike.namespace",AS_NAMESPACE)

spark.conf.set("aerospike.seedhost",AS_CONNECTION_STRING)

spark.conf.set("aerospike.keyPath",AS_FEATURE_KEY_PATH )

```

## Step 1: Load Data into a DataFrame

```

as_data=spark \

.read \

.format("aerospike") \

.option("aerospike.set", "natality").load()

as_data.show(5)

print("Inferred Schema along with Metadata.")

as_data.printSchema()

```

### To speed up the load process at scale, use the [knobs](https://www.aerospike.com/docs/connect/processing/spark/performance.html) available in the Aerospike Spark Connector.

For example, **spark.conf.set("aerospike.partition.factor", 15 )** will map 4096 Aerospike partitions to 32K Spark partitions. <font color=red> (Note: Please configure this carefully based on the available resources (CPU threads) in your system.)</font>

## Step 2 - Prep data

```

# This Spark3.0 setting, if true, will turn on Adaptive Query Execution (AQE), which will make use of the

# runtime statistics to choose the most efficient query execution plan. It will speed up any joins that you

# plan to use for data prep step.

spark.conf.set("spark.sql.adaptive.enabled", 'true')

# Run a query in Spark SQL to ensure no NULL values exist.

as_data.createOrReplaceTempView("natality")

sql_query = """

SELECT *

from natality

where weight_pnd is not null

and mother_age is not null

and father_age is not null

and father_age < 80

and gstation_week is not null

and weight_gain_pnd < 90

and apgar_5min != "99"

and apgar_5min != "88"

"""

clean_data = spark.sql(sql_query)

#Drop the Aerospike metadata from the dataset because its not required.

#The metadata is added because we are inferring the schema as opposed to providing a strict schema

columns_to_drop = ['__key','__digest','__expiry','__generation','__ttl' ]

clean_data = clean_data.drop(*columns_to_drop)

# dropping null values

clean_data = clean_data.dropna()

clean_data.cache()

clean_data.show(5)

#Descriptive Analysis of the data

clean_data.describe().toPandas().transpose()

```

## Step 3 Visualize Data

```

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

import math

pdf = clean_data.toPandas()

#Histogram - Father Age

pdf[['father_age']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Fathers Age (years)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

'''

pdf[['mother_age']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Mothers Age (years)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

'''

pdf[['weight_pnd']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Babys Weight (Pounds)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

pdf[['gstation_week']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Gestation (Weeks)',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

pdf[['weight_gain_pnd']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('mother’s weight gain during pregnancy',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

#Histogram - Apgar Score

print("Apgar Score: Scores of 7 and above are generally normal; 4 to 6, fairly low; and 3 and below are generally \

regarded as critically low and cause for immediate resuscitative efforts.")

pdf[['apgar_5min']].plot(kind='hist',bins=10,rwidth=0.8)

plt.xlabel('Apgar score',fontsize=12)

plt.legend(loc=None)

plt.style.use('seaborn-whitegrid')

plt.show()

```

## Step 4 - Create Model

**Steps used for model creation:**

1. Split cleaned data into Training and Test sets

2. Vectorize features on which the model will be trained

3. Create a linear regression model (Choose any ML algorithm that provides the best fit for the given dataset)

4. Train model (Although not shown here, you could use K-fold cross-validation and Grid Search to choose the best hyper-parameters for the model)

5. Evaluate model

```

# Define a function that collects the features of interest

# (mother_age, father_age, and gestation_weeks) into a vector.

# Package the vector in a tuple containing the label (`weight_pounds`) for that

# row.##

def vector_from_inputs(r):

return (r["weight_pnd"], Vectors.dense(float(r["mother_age"]),

float(r["father_age"]),

float(r["gstation_week"]),

float(r["weight_gain_pnd"]),

float(r["apgar_5min"])))

#Split that data 70% training and 30% Evaluation data

train, test = clean_data.randomSplit([0.7, 0.3])

#Check the shape of the data

train.show()

print((train.count(), len(train.columns)))

test.show()

print((test.count(), len(test.columns)))

# Create an input DataFrame for Spark ML using the above function.

training_data = train.rdd.map(vector_from_inputs).toDF(["label",

"features"])

# Construct a new LinearRegression object and fit the training data.

lr = LinearRegression(maxIter=5, regParam=0.2, solver="normal")

#Voila! your first model using Spark ML is trained

model = lr.fit(training_data)

# Print the model summary.

print("Coefficients:" + str(model.coefficients))

print("Intercept:" + str(model.intercept))

print("R^2:" + str(model.summary.r2))

model.summary.residuals.show()

```

### Evaluate Model

```

eval_data = test.rdd.map(vector_from_inputs).toDF(["label",

"features"])

eval_data.show()

evaluation_summary = model.evaluate(eval_data)

print("MAE:", evaluation_summary.meanAbsoluteError)

print("RMSE:", evaluation_summary.rootMeanSquaredError)

print("R-squared value:", evaluation_summary.r2)

```

## Step 5 - Batch Prediction

```

#eval_data contains the records (ideally production) that you'd like to use for the prediction

predictions = model.transform(eval_data)

predictions.show()

```

#### Compare the labels and the predictions, they should ideally match up for an accurate model. Label is the actual weight of the baby and prediction is the predicated weight

### Saving the Predictions to Aerospike for ML Application's consumption

```

# Aerospike is a key/value database, hence a key is needed to store the predictions into the database. Hence we need

# to add the _id column to the predictions using SparkSQL

predictions.createOrReplaceTempView("predict_view")

sql_query = """

SELECT *, monotonically_increasing_id() as _id

from predict_view

"""

predict_df = spark.sql(sql_query)

predict_df.show()

print("#records:", predict_df.count())

# Now we are good to write the Predictions to Aerospike

predict_df \

.write \

.mode('overwrite') \

.format("aerospike") \

.option("aerospike.writeset", "predictions")\

.option("aerospike.updateByKey", "_id") \

.save()

```

#### You can verify that data is written to Aerospike by using either [AQL](https://www.aerospike.com/docs/tools/aql/data_management.html) or the [Aerospike Data Browser](https://github.com/aerospike/aerospike-data-browser)

## Step 6 - Deploy

### Here are a few options:

1. Save the model to a PMML file by converting it using Jpmml/[pyspark2pmml](https://github.com/jpmml/pyspark2pmml) and load it into your production enviornment for inference.

2. Use Aerospike as an [edge database for high velocity ingestion](https://medium.com/aerospike-developer-blog/add-horsepower-to-ai-ml-pipeline-15ca42a10982) for your inference pipline.

| true |

code

| 0.475301 | null | null | null | null |

|

# Classification on Iris dataset with sklearn and DJL

In this notebook, you will try to use a pre-trained sklearn model to run on DJL for a general classification task. The model was trained with [Iris flower dataset](https://en.wikipedia.org/wiki/Iris_flower_data_set).

## Background

### Iris Dataset

The dataset contains a set of 150 records under five attributes - sepal length, sepal width, petal length, petal width and species.

Iris setosa | Iris versicolor | Iris virginica

:-------------------------:|:-------------------------:|:-------------------------:

|  |

The chart above shows three different kinds of the Iris flowers.

We will use sepal length, sepal width, petal length, petal width as the feature and species as the label to train the model.

### Sklearn Model

You can find more information [here](http://onnx.ai/sklearn-onnx/). You can use the sklearn built-in iris dataset to load the data. Then we defined a [RandomForestClassifer](https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.RandomForestClassifier.html) to train the model. After that, we convert the model to onnx format for DJL to run inference. The following code is a sample classification setup using sklearn:

```python

# Train a model.

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

iris = load_iris()

X, y = iris.data, iris.target

X_train, X_test, y_train, y_test = train_test_split(X, y)

clr = RandomForestClassifier()

clr.fit(X_train, y_train)

```

## Preparation

This tutorial requires the installation of Java Kernel. To install the Java Kernel, see the [README](https://github.com/awslabs/djl/blob/master/jupyter/README.md).

These are dependencies we will use. To enhance the NDArray operation capability, we are importing ONNX Runtime and PyTorch Engine at the same time. Please find more information [here](https://github.com/awslabs/djl/blob/master/docs/onnxruntime/hybrid_engine.md#hybrid-engine-for-onnx-runtime).

```

// %mavenRepo snapshots https://oss.sonatype.org/content/repositories/snapshots/

%maven ai.djl:api:0.8.0

%maven ai.djl.onnxruntime:onnxruntime-engine:0.8.0

%maven ai.djl.pytorch:pytorch-engine:0.8.0

%maven org.slf4j:slf4j-api:1.7.26

%maven org.slf4j:slf4j-simple:1.7.26

%maven com.microsoft.onnxruntime:onnxruntime:1.4.0

%maven ai.djl.pytorch:pytorch-native-auto:1.6.0

import ai.djl.inference.*;

import ai.djl.modality.*;

import ai.djl.ndarray.*;

import ai.djl.ndarray.types.*;

import ai.djl.repository.zoo.*;

import ai.djl.translate.*;

import java.util.*;

```

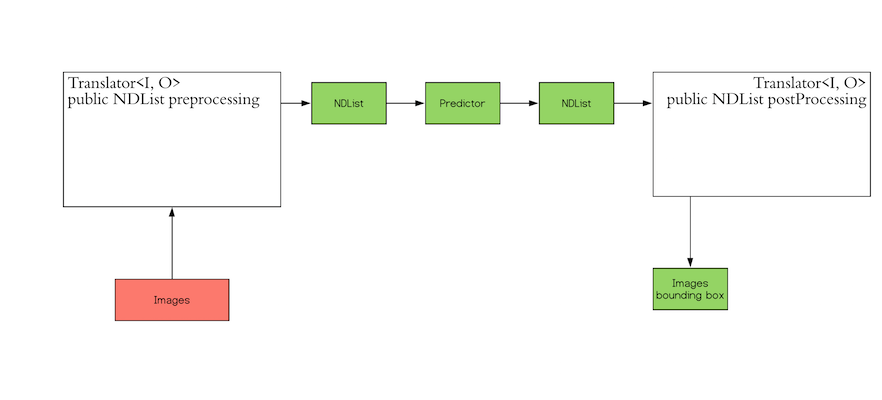

## Step 1 create a Translator

Inference in machine learning is the process of predicting the output for a given input based on a pre-defined model.

DJL abstracts away the whole process for ease of use. It can load the model, perform inference on the input, and provide

output. DJL also allows you to provide user-defined inputs. The workflow looks like the following:

The `Translator` interface encompasses the two white blocks: Pre-processing and Post-processing. The pre-processing

component converts the user-defined input objects into an NDList, so that the `Predictor` in DJL can understand the

input and make its prediction. Similarly, the post-processing block receives an NDList as the output from the

`Predictor`. The post-processing block allows you to convert the output from the `Predictor` to the desired output

format.

In our use case, we use a class namely `IrisFlower` as our input class type. We will use [`Classifications`](https://javadoc.io/doc/ai.djl/api/latest/ai/djl/modality/Classifications.html) as our output class type.

```

public static class IrisFlower {

public float sepalLength;

public float sepalWidth;

public float petalLength;

public float petalWidth;

public IrisFlower(float sepalLength, float sepalWidth, float petalLength, float petalWidth) {

this.sepalLength = sepalLength;

this.sepalWidth = sepalWidth;

this.petalLength = petalLength;

this.petalWidth = petalWidth;

}

}

```

Let's create a translator

```

public static class MyTranslator implements Translator<IrisFlower, Classifications> {

private final List<String> synset;

public MyTranslator() {

// species name

synset = Arrays.asList("setosa", "versicolor", "virginica");

}

@Override

public NDList processInput(TranslatorContext ctx, IrisFlower input) {

float[] data = {input.sepalLength, input.sepalWidth, input.petalLength, input.petalWidth};

NDArray array = ctx.getNDManager().create(data, new Shape(1, 4));

return new NDList(array);

}

@Override

public Classifications processOutput(TranslatorContext ctx, NDList list) {

return new Classifications(synset, list.get(1));

}

@Override

public Batchifier getBatchifier() {

return null;

}

}

```

## Step 2 Prepare your model

We will load a pretrained sklearn model into DJL. We defined a [`ModelZoo`](https://javadoc.io/doc/ai.djl/api/latest/ai/djl/repository/zoo/ModelZoo.html) concept to allow user load model from varity of locations, such as remote URL, local files or DJL pretrained model zoo. We need to define `Criteria` class to help the modelzoo locate the model and attach translator. In this example, we download a compressed ONNX model from S3.

```

String modelUrl = "https://mlrepo.djl.ai/model/tabular/random_forest/ai/djl/onnxruntime/iris_flowers/0.0.1/iris_flowers.zip";

Criteria<IrisFlower, Classifications> criteria = Criteria.builder()

.setTypes(IrisFlower.class, Classifications.class)

.optModelUrls(modelUrl)

.optTranslator(new MyTranslator())

.optEngine("OnnxRuntime") // use OnnxRuntime engine by default

.build();

ZooModel<IrisFlower, Classifications> model = ModelZoo.loadModel(criteria);

```

## Step 3 Run inference

User will just need to create a `Predictor` from model to run the inference.

```

Predictor<IrisFlower, Classifications> predictor = model.newPredictor();

IrisFlower info = new IrisFlower(1.0f, 2.0f, 3.0f, 4.0f);

predictor.predict(info);

```

| true |

code

| 0.782642 | null | null | null | null |

|

<a href="https://colab.research.google.com/github/satyajitghana/TSAI-DeepNLP-END2.0/blob/main/09_NLP_Evaluation/ClassificationEvaluation.ipynb" target="_parent"><img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/></a>

```

! pip3 install git+https://github.com/extensive-nlp/ttc_nlp --quiet

! pip3 install torchmetrics --quiet

from ttctext.datamodules.sst import SSTDataModule

from ttctext.datasets.sst import StanfordSentimentTreeBank

sst_dataset = SSTDataModule(batch_size=128)

sst_dataset.setup()

import pytorch_lightning as pl

import torch

import torch.nn as nn

import torch.nn.functional as F

from torchmetrics.functional import accuracy, precision, recall, confusion_matrix

from sklearn.metrics import classification_report

import matplotlib.pyplot as plt

import seaborn as sns

import pandas as pd

sns.set()

class SSTModel(pl.LightningModule):

def __init__(self, hparams, *args, **kwargs):

super().__init__()

self.save_hyperparameters(hparams)

self.num_classes = self.hparams.output_dim

self.embedding = nn.Embedding(self.hparams.input_dim, self.hparams.embedding_dim)

self.lstm = nn.LSTM(

self.hparams.embedding_dim,

self.hparams.hidden_dim,

num_layers=self.hparams.num_layers,

dropout=self.hparams.dropout,

batch_first=True

)

self.proj_layer = nn.Sequential(

nn.Linear(self.hparams.hidden_dim, self.hparams.hidden_dim),

nn.BatchNorm1d(self.hparams.hidden_dim),

nn.ReLU(),

nn.Dropout(self.hparams.dropout),

)

self.fc = nn.Linear(self.hparams.hidden_dim, self.num_classes)

self.loss = nn.CrossEntropyLoss()

def init_state(self, sequence_length):

return (torch.zeros(self.hparams.num_layers, sequence_length, self.hparams.hidden_dim).to(self.device),

torch.zeros(self.hparams.num_layers, sequence_length, self.hparams.hidden_dim).to(self.device))

def forward(self, text, text_length, prev_state=None):

# [batch size, sentence length] => [batch size, sentence len, embedding size]

embedded = self.embedding(text)

# packs the input for faster forward pass in RNN

packed = torch.nn.utils.rnn.pack_padded_sequence(

embedded, text_length.to('cpu'),

enforce_sorted=False,

batch_first=True

)

# [batch size sentence len, embedding size] =>

# output: [batch size, sentence len, hidden size]

# hidden: [batch size, 1, hidden size]

packed_output, curr_state = self.lstm(packed, prev_state)

hidden_state, cell_state = curr_state

# print('hidden state shape: ', hidden_state.shape)

# print('cell')

# unpack packed sequence

# unpacked, unpacked_len = torch.nn.utils.rnn.pad_packed_sequence(packed_output, batch_first=True)

# print('unpacked: ', unpacked.shape)

# [batch size, sentence len, hidden size] => [batch size, num classes]

# output = self.proj_layer(unpacked[:, -1])

output = self.proj_layer(hidden_state[-1])

# print('output shape: ', output.shape)

output = self.fc(output)

return output, curr_state

def shared_step(self, batch, batch_idx):

label, text, text_length = batch

logits, in_state = self(text, text_length)

loss = self.loss(logits, label)

pred = torch.argmax(F.log_softmax(logits, dim=1), dim=1)

acc = accuracy(pred, label)

metric = {'loss': loss, 'acc': acc, 'pred': pred, 'label': label}

return metric

def training_step(self, batch, batch_idx):

metrics = self.shared_step(batch, batch_idx)

log_metrics = {'train_loss': metrics['loss'], 'train_acc': metrics['acc']}

self.log_dict(log_metrics, prog_bar=True)

return metrics

def validation_step(self, batch, batch_idx):

metrics = self.shared_step(batch, batch_idx)

return metrics

def validation_epoch_end(self, outputs):

acc = torch.stack([x['acc'] for x in outputs]).mean()

loss = torch.stack([x['loss'] for x in outputs]).mean()

log_metrics = {'val_loss': loss, 'val_acc': acc}

self.log_dict(log_metrics, prog_bar=True)

if self.trainer.sanity_checking:

return log_metrics

preds = torch.cat([x['pred'] for x in outputs]).view(-1)

labels = torch.cat([x['label'] for x in outputs]).view(-1)

accuracy_ = accuracy(preds, labels)

precision_ = precision(preds, labels, average='macro', num_classes=self.num_classes)

recall_ = recall(preds, labels, average='macro', num_classes=self.num_classes)

classification_report_ = classification_report(labels.cpu().numpy(), preds.cpu().numpy(), target_names=self.hparams.class_labels)

confusion_matrix_ = confusion_matrix(preds, labels, num_classes=self.num_classes)

cm_df = pd.DataFrame(confusion_matrix_.cpu().numpy(), index=self.hparams.class_labels, columns=self.hparams.class_labels)

print(f'Test Epoch {self.current_epoch}/{self.hparams.epochs-1}: F1 Score: {accuracy_:.5f}, Precision: {precision_:.5f}, Recall: {recall_:.5f}\n')

print(f'Classification Report\n{classification_report_}')

fig, ax = plt.subplots(figsize=(10, 8))

heatmap = sns.heatmap(cm_df, annot=True, ax=ax, fmt='d') # font size

locs, labels = plt.xticks()

plt.setp(labels, rotation=45)

locs, labels = plt.yticks()

plt.setp(labels, rotation=45)

plt.show()

print("\n")

return log_metrics

def test_step(self, batch, batch_idx):

return self.validation_step(batch, batch_idx)

def test_epoch_end(self, outputs):

accuracy = torch.stack([x['acc'] for x in outputs]).mean()

self.log('hp_metric', accuracy)

self.log_dict({'test_acc': accuracy}, prog_bar=True)

def configure_optimizers(self):

optimizer = torch.optim.Adam(self.parameters(), lr=self.hparams.lr)

lr_scheduler = {

'scheduler': torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, patience=10, verbose=True),

'monitor': 'train_loss',

'name': 'scheduler'

}

return [optimizer], [lr_scheduler]

from omegaconf import OmegaConf

hparams = OmegaConf.create({

'input_dim': len(sst_dataset.get_vocab()),

'embedding_dim': 128,

'num_layers': 2,

'hidden_dim': 64,

'dropout': 0.5,

'output_dim': len(StanfordSentimentTreeBank.get_labels()),

'class_labels': sst_dataset.raw_dataset_train.get_labels(),

'lr': 5e-4,

'epochs': 10,

'use_lr_finder': False

})

sst_model = SSTModel(hparams)

trainer = pl.Trainer(gpus=1, max_epochs=hparams.epochs, progress_bar_refresh_rate=1, reload_dataloaders_every_epoch=True)

trainer.fit(sst_model, sst_dataset)

```

| true |

code

| 0.862265 | null | null | null | null |

|

## Accessing TerraClimate data with the Planetary Computer STAC API

[TerraClimate](http://www.climatologylab.org/terraclimate.html) is a dataset of monthly climate and climatic water balance for global terrestrial surfaces from 1958-2019. These data provide important inputs for ecological and hydrological studies at global scales that require high spatial resolution and time-varying data. All data have monthly temporal resolution and a ~4-km (1/24th degree) spatial resolution. The data cover the period from 1958-2019.

This example will show you how temperature has increased over the past 60 years across the globe.

### Environment setup

```

import warnings

warnings.filterwarnings("ignore", "invalid value", RuntimeWarning)

```

### Data access

https://planetarycomputer.microsoft.com/api/stac/v1/collections/terraclimate is a STAC Collection with links to all the metadata about this dataset. We'll load it with [PySTAC](https://pystac.readthedocs.io/en/latest/).

```

import pystac

url = "https://planetarycomputer.microsoft.com/api/stac/v1/collections/terraclimate"

collection = pystac.read_file(url)

collection

```

The collection contains assets, which are links to the root of a Zarr store, which can be opened with xarray.

```

asset = collection.assets["zarr-https"]

asset

import fsspec

import xarray as xr

store = fsspec.get_mapper(asset.href)

ds = xr.open_zarr(store, **asset.extra_fields["xarray:open_kwargs"])

ds

```

We'll process the data in parallel using [Dask](https://dask.org).

```

from dask_gateway import GatewayCluster

cluster = GatewayCluster()

cluster.scale(16)

client = cluster.get_client()

print(cluster.dashboard_link)

```

The link printed out above can be opened in a new tab or the [Dask labextension](https://github.com/dask/dask-labextension). See [Scale with Dask](https://planetarycomputer.microsoft.com/docs/quickstarts/scale-with-dask/) for more on using Dask, and how to access the Dashboard.

### Analyze and plot global temperature

We can quickly plot a map of one of the variables. In this case, we are downsampling (coarsening) the dataset for easier plotting.

```

import cartopy.crs as ccrs

import matplotlib.pyplot as plt

average_max_temp = ds.isel(time=-1)["tmax"].coarsen(lat=8, lon=8).mean().load()

fig, ax = plt.subplots(figsize=(20, 10), subplot_kw=dict(projection=ccrs.Robinson()))

average_max_temp.plot(ax=ax, transform=ccrs.PlateCarree())

ax.coastlines();

```

Let's see how temperature has changed over the observational record, when averaged across the entire domain. Since we'll do some other calculations below we'll also add `.load()` to execute the command instead of specifying it lazily. Note that there are some data quality issues before 1965 so we'll start our analysis there.

```

temperature = (

ds["tmax"].sel(time=slice("1965", None)).mean(dim=["lat", "lon"]).persist()

)

temperature.plot(figsize=(12, 6));

```

With all the seasonal fluctuations (from summer and winter) though, it can be hard to see any obvious trends. So let's try grouping by year and plotting that timeseries.

```

temperature.groupby("time.year").mean().plot(figsize=(12, 6));

```

Now the increase in temperature is obvious, even when averaged across the entire domain.

Now, let's see how those changes are different in different parts of the world. And let's focus just on summer months in the northern hemisphere, when it's hottest. Let's take a climatological slice at the beginning of the period and the same at the end of the period, calculate the difference, and map it to see how different parts of the world have changed differently.

First we'll just grab the summer months.

```

%%time

import dask

summer_months = [6, 7, 8]

summer = ds.tmax.where(ds.time.dt.month.isin(summer_months), drop=True)

early_period = slice("1958-01-01", "1988-12-31")

late_period = slice("1988-01-01", "2018-12-31")

early, late = dask.compute(

summer.sel(time=early_period).mean(dim="time"),

summer.sel(time=late_period).mean(dim="time"),

)

increase = (late - early).coarsen(lat=8, lon=8).mean()

fig, ax = plt.subplots(figsize=(20, 10), subplot_kw=dict(projection=ccrs.Robinson()))

increase.plot(ax=ax, transform=ccrs.PlateCarree(), robust=True)

ax.coastlines();

```

This shows us that changes in summer temperature haven't been felt equally around the globe. Note the enhanced warming in the polar regions, a phenomenon known as "Arctic amplification".

| true |

code

| 0.609059 | null | null | null | null |

|

```

import numpy as np

import matplotlib.pyplot as plt

import numba

from tqdm import tqdm

import eitest

```

# Data generators

```

@numba.njit

def event_series_bernoulli(series_length, event_count):

'''Generate an iid Bernoulli distributed event series.

series_length: length of the event series

event_count: number of events'''

event_series = np.zeros(series_length)

event_series[np.random.choice(np.arange(0, series_length), event_count, replace=False)] = 1

return event_series

@numba.njit

def time_series_mean_impact(event_series, order, signal_to_noise):

'''Generate a time series with impacts in mean as described in the paper.

The impact weights are sampled iid from N(0, signal_to_noise),

and additional noise is sampled iid from N(0,1). The detection problem will

be harder than in time_series_meanconst_impact for small orders, as for small

orders we have a low probability to sample at least one impact weight with a

high magnitude. On the other hand, since the impact is different at every lag,

we can detect the impacts even if the order is larger than the max_lag value

used in the test.

event_series: input of shape (T,) with event occurrences

order: order of the event impacts

signal_to_noise: signal to noise ratio of the event impacts'''

series_length = len(event_series)

weights = np.random.randn(order)*np.sqrt(signal_to_noise)

time_series = np.random.randn(series_length)

for t in range(series_length):

if event_series[t] == 1:

time_series[t+1:t+order+1] += weights[:order-max(0, (t+order+1)-series_length)]

return time_series

@numba.njit

def time_series_meanconst_impact(event_series, order, const):

'''Generate a time series with impacts in mean by adding a constant.

Better for comparing performance across different impact orders, since the

magnitude of the impact will always be the same.

event_series: input of shape (T,) with event occurrences

order: order of the event impacts

const: constant for mean shift'''

series_length = len(event_series)

time_series = np.random.randn(series_length)

for t in range(series_length):

if event_series[t] == 1:

time_series[t+1:t+order+1] += const

return time_series

@numba.njit

def time_series_var_impact(event_series, order, variance):

'''Generate a time series with impacts in variance as described in the paper.

event_series: input of shape (T,) with event occurrences

order: order of the event impacts

variance: variance under event impacts'''

series_length = len(event_series)

time_series = np.random.randn(series_length)

for t in range(series_length):

if event_series[t] == 1:

for tt in range(t+1, min(series_length, t+order+1)):

time_series[tt] = np.random.randn()*np.sqrt(variance)

return time_series

@numba.njit

def time_series_tail_impact(event_series, order, dof):

'''Generate a time series with impacts in tails as described in the paper.

event_series: input of shape (T,) with event occurrences

order: delay of the event impacts

dof: degrees of freedom of the t distribution'''

series_length = len(event_series)

time_series = np.random.randn(series_length)*np.sqrt(dof/(dof-2))

for t in range(series_length):

if event_series[t] == 1:

for tt in range(t+1, min(series_length, t+order+1)):

time_series[tt] = np.random.standard_t(dof)

return time_series

```

# Visualization of the impact models

```

default_T = 8192

default_N = 64

default_q = 4

es = event_series_bernoulli(default_T, default_N)

for ts in [

time_series_mean_impact(es, order=default_q, signal_to_noise=10.),

time_series_meanconst_impact(es, order=default_q, const=5.),

time_series_var_impact(es, order=default_q, variance=4.),

time_series_tail_impact(es, order=default_q, dof=3.),

]:

fig, (ax1, ax2) = plt.subplots(1, 2, gridspec_kw={'width_ratios': [2, 1]}, figsize=(15, 2))

ax1.plot(ts)

ax1.plot(es*np.max(ts), alpha=0.5)

ax1.set_xlim(0, len(es))

samples = eitest.obtain_samples(es, ts, method='eager', lag_cutoff=15, instantaneous=True)

eitest.plot_samples(samples, ax2)

plt.show()

```

# Simulations

```

def test_simul_pairs(impact_model, param_T, param_N, param_q, param_r,

n_pairs, lag_cutoff, instantaneous, sample_method,

twosamp_test, multi_test, alpha):

true_positive = 0.

false_positive = 0.

for _ in tqdm(range(n_pairs)):

es = event_series_bernoulli(param_T, param_N)

if impact_model == 'mean':

ts = time_series_mean_impact(es, param_q, param_r)

elif impact_model == 'meanconst':

ts = time_series_meanconst_impact(es, param_q, param_r)

elif impact_model == 'var':

ts = time_series_var_impact(es, param_q, param_r)

elif impact_model == 'tail':

ts = time_series_tail_impact(es, param_q, param_r)

else:

raise ValueError('impact_model must be "mean", "meanconst", "var" or "tail"')

# coupled pair

samples = eitest.obtain_samples(es, ts, lag_cutoff=lag_cutoff,

method=sample_method,

instantaneous=instantaneous,

sort=(twosamp_test == 'ks')) # samples need to be sorted for K-S test

tstats, pvals = eitest.pairwise_twosample_tests(samples, twosamp_test, min_pts=2)

pvals_adj = eitest.multitest(np.sort(pvals[~np.isnan(pvals)]), multi_test)

true_positive += (pvals_adj.min() < alpha)

# uncoupled pair

samples = eitest.obtain_samples(np.random.permutation(es), ts, lag_cutoff=lag_cutoff,

method=sample_method,

instantaneous=instantaneous,

sort=(twosamp_test == 'ks'))

tstats, pvals = eitest.pairwise_twosample_tests(samples, twosamp_test, min_pts=2)

pvals_adj = eitest.multitest(np.sort(pvals[~np.isnan(pvals)]), multi_test)

false_positive += (pvals_adj.min() < alpha)

return true_positive/n_pairs, false_positive/n_pairs

# global parameters

default_T = 8192

n_pairs = 100

alpha = 0.05

twosamp_test = 'ks'

multi_test = 'simes'

sample_method = 'lazy'

lag_cutoff = 32

instantaneous = True

```

## Mean impact model

```

default_N = 64

default_r = 1.

default_q = 4

```

### ... by number of events

```

vals = [4, 8, 16, 32, 64, 128, 256]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='mean', param_T=default_T,

param_N=val, param_q=default_q, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_N, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# mean impact model (T={default_T}, q={default_q}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# N\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by impact order

```

vals = [1, 2, 4, 8, 16, 32]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='mean', param_T=default_T,

param_N=default_N, param_q=val, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_q, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# mean impact model (T={default_T}, N={default_N}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# q\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by signal-to-noise ratio

```

vals = [1./32, 1./16, 1./8, 1./4, 1./2, 1., 2., 4.]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='mean', param_T=default_T,

param_N=default_N, param_q=default_q, param_r=val,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_r, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# mean impact model (T={default_T}, N={default_N}, q={default_q}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# r\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

```

## Meanconst impact model

```

default_N = 64

default_r = 0.5

default_q = 4

```

### ... by number of events

```

vals = [4, 8, 16, 32, 64, 128, 256]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='meanconst', param_T=default_T,

param_N=val, param_q=default_q, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_N, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# meanconst impact model (T={default_T}, q={default_q}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# N\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by impact order

```

vals = [1, 2, 4, 8, 16, 32]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='meanconst', param_T=default_T,

param_N=default_N, param_q=val, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_q, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# meanconst impact model (T={default_T}, N={default_N}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# q\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by mean value

```

vals = [0.125, 0.25, 0.5, 1, 2]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='meanconst', param_T=default_T,

param_N=default_N, param_q=default_q, param_r=val,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_r, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# meanconst impact model (T={default_T}, N={default_N}, q={default_q}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# r\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

## Variance impact model

In the paper, we show results with the variance impact model parametrized by the **variance increase**. Here we directly modulate the variance.

```

default_N = 64

default_r = 8.

default_q = 4

```

### ... by number of events

```

vals = [4, 8, 16, 32, 64, 128, 256]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='var', param_T=default_T,

param_N=val, param_q=default_q, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_N, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# var impact model (T={default_T}, q={default_q}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# N\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by impact order

```

vals = [1, 2, 4, 8, 16, 32]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='var', param_T=default_T,

param_N=default_N, param_q=val, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_q, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# var impact model (T={default_T}, N={default_N}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# q\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by variance

```

vals = [2., 4., 8., 16., 32.]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='var', param_T=default_T,

param_N=default_N, param_q=default_q, param_r=val,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_r, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# var impact model (T={default_T}, N={default_N}, q={default_q}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# r\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

## Tail impact model

```

default_N = 512

default_r = 3.

default_q = 4

```

### ... by number of events

```

vals = [64, 128, 256, 512, 1024]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='tail', param_T=default_T,

param_N=val, param_q=default_q, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_N, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# tail impact model (T={default_T}, q={default_q}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# N\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by impact order

```

vals = [1, 2, 4, 8, 16, 32]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='tail', param_T=default_T,

param_N=default_N, param_q=val, param_r=default_r,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_q, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.gca().set_xscale('log', base=2)

plt.legend()

plt.show()

print(f'# tail impact model (T={default_T}, N={default_N}, r={default_r}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# q\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

### ... by degrees of freedom

```

vals = [2.5, 3., 3.5, 4., 4.5, 5., 5.5, 6.]

tprs = np.empty(len(vals))

fprs = np.empty(len(vals))

for i, val in enumerate(vals):

tprs[i], fprs[i] = test_simul_pairs(impact_model='tail', param_T=default_T,

param_N=default_N, param_q=default_q, param_r=val,

n_pairs=n_pairs, sample_method=sample_method,

lag_cutoff=lag_cutoff, instantaneous=instantaneous,

twosamp_test=twosamp_test, multi_test=multi_test, alpha=alpha)

plt.figure(figsize=(3,3))

plt.axvline(default_r, ls='-', c='gray', lw=1, label='def')

plt.axhline(alpha, ls='--', c='black', lw=1, label='alpha')

plt.plot(vals, tprs, label='TPR', marker='x')

plt.plot(vals, fprs, label='FPR', marker='x')

plt.legend()

plt.show()

print(f'# tail impact model (T={default_T}, N={default_N}, q={default_q}, n_pairs={n_pairs}, cutoff={lag_cutoff}, instantaneous={instantaneous}, alpha={alpha}, {sample_method}-{twosamp_test}-{multi_test})')

print(f'# r\ttpr\tfpr')

for i, (tpr, fpr) in enumerate(zip(tprs, fprs)):

print(f'{vals[i]}\t{tpr}\t{fpr}')

print()

```

| true |

code

| 0.687079 | null | null | null | null |

|

# Chapter 4

`Original content created by Cam Davidson-Pilon`

`Ported to Python 3 and PyMC3 by Max Margenot (@clean_utensils) and Thomas Wiecki (@twiecki) at Quantopian (@quantopian)`

______

## The greatest theorem never told

This chapter focuses on an idea that is always bouncing around our minds, but is rarely made explicit outside books devoted to statistics. In fact, we've been using this simple idea in every example thus far.

### The Law of Large Numbers

Let $Z_i$ be $N$ independent samples from some probability distribution. According to *the Law of Large numbers*, so long as the expected value $E[Z]$ is finite, the following holds,

$$\frac{1}{N} \sum_{i=1}^N Z_i \rightarrow E[ Z ], \;\;\; N \rightarrow \infty.$$

In words:

> The average of a sequence of random variables from the same distribution converges to the expected value of that distribution.

This may seem like a boring result, but it will be the most useful tool you use.

### Intuition

If the above Law is somewhat surprising, it can be made more clear by examining a simple example.

Consider a random variable $Z$ that can take only two values, $c_1$ and $c_2$. Suppose we have a large number of samples of $Z$, denoting a specific sample $Z_i$. The Law says that we can approximate the expected value of $Z$ by averaging over all samples. Consider the average:

$$ \frac{1}{N} \sum_{i=1}^N \;Z_i $$

By construction, $Z_i$ can only take on $c_1$ or $c_2$, hence we can partition the sum over these two values:

\begin{align}

\frac{1}{N} \sum_{i=1}^N \;Z_i

& =\frac{1}{N} \big( \sum_{ Z_i = c_1}c_1 + \sum_{Z_i=c_2}c_2 \big) \\\\[5pt]

& = c_1 \sum_{ Z_i = c_1}\frac{1}{N} + c_2 \sum_{ Z_i = c_2}\frac{1}{N} \\\\[5pt]

& = c_1 \times \text{ (approximate frequency of $c_1$) } \\\\

& \;\;\;\;\;\;\;\;\; + c_2 \times \text{ (approximate frequency of $c_2$) } \\\\[5pt]

& \approx c_1 \times P(Z = c_1) + c_2 \times P(Z = c_2 ) \\\\[5pt]

& = E[Z]

\end{align}

Equality holds in the limit, but we can get closer and closer by using more and more samples in the average. This Law holds for almost *any distribution*, minus some important cases we will encounter later.

##### Example

____

Below is a diagram of the Law of Large numbers in action for three different sequences of Poisson random variables.

We sample `sample_size = 100000` Poisson random variables with parameter $\lambda = 4.5$. (Recall the expected value of a Poisson random variable is equal to it's parameter.) We calculate the average for the first $n$ samples, for $n=1$ to `sample_size`.

```

%matplotlib inline

import numpy as np

from IPython.core.pylabtools import figsize

import matplotlib.pyplot as plt

figsize( 12.5, 5 )

sample_size = 100000

expected_value = lambda_ = 4.5

poi = np.random.poisson

N_samples = range(1,sample_size,100)

for k in range(3):

samples = poi( lambda_, sample_size )

partial_average = [ samples[:i].mean() for i in N_samples ]

plt.plot( N_samples, partial_average, lw=1.5,label="average \

of $n$ samples; seq. %d"%k)

plt.plot( N_samples, expected_value*np.ones_like( partial_average),

ls = "--", label = "true expected value", c = "k" )

plt.ylim( 4.35, 4.65)

plt.title( "Convergence of the average of \n random variables to its \

expected value" )

plt.ylabel( "average of $n$ samples" )

plt.xlabel( "# of samples, $n$")

plt.legend();

```

Looking at the above plot, it is clear that when the sample size is small, there is greater variation in the average (compare how *jagged and jumpy* the average is initially, then *smooths* out). All three paths *approach* the value 4.5, but just flirt with it as $N$ gets large. Mathematicians and statistician have another name for *flirting*: convergence.

Another very relevant question we can ask is *how quickly am I converging to the expected value?* Let's plot something new. For a specific $N$, let's do the above trials thousands of times and compute how far away we are from the true expected value, on average. But wait — *compute on average*? This is simply the law of large numbers again! For example, we are interested in, for a specific $N$, the quantity:

$$D(N) = \sqrt{ \;E\left[\;\; \left( \frac{1}{N}\sum_{i=1}^NZ_i - 4.5 \;\right)^2 \;\;\right] \;\;}$$

The above formulae is interpretable as a distance away from the true value (on average), for some $N$. (We take the square root so the dimensions of the above quantity and our random variables are the same). As the above is an expected value, it can be approximated using the law of large numbers: instead of averaging $Z_i$, we calculate the following multiple times and average them:

$$ Y_k = \left( \;\frac{1}{N}\sum_{i=1}^NZ_i - 4.5 \; \right)^2 $$

By computing the above many, $N_y$, times (remember, it is random), and averaging them:

$$ \frac{1}{N_Y} \sum_{k=1}^{N_Y} Y_k \rightarrow E[ Y_k ] = E\;\left[\;\; \left( \frac{1}{N}\sum_{i=1}^NZ_i - 4.5 \;\right)^2 \right]$$

Finally, taking the square root:

$$ \sqrt{\frac{1}{N_Y} \sum_{k=1}^{N_Y} Y_k} \approx D(N) $$

```

figsize( 12.5, 4)

N_Y = 250 #use this many to approximate D(N)

N_array = np.arange( 1000, 50000, 2500 ) #use this many samples in the approx. to the variance.

D_N_results = np.zeros( len( N_array ) )

lambda_ = 4.5

expected_value = lambda_ #for X ~ Poi(lambda) , E[ X ] = lambda

def D_N( n ):

"""

This function approx. D_n, the average variance of using n samples.

"""

Z = poi( lambda_, (n, N_Y) )

average_Z = Z.mean(axis=0)

return np.sqrt( ( (average_Z - expected_value)**2 ).mean() )

for i,n in enumerate(N_array):

D_N_results[i] = D_N(n)

plt.xlabel( "$N$" )

plt.ylabel( "expected squared-distance from true value" )

plt.plot(N_array, D_N_results, lw = 3,

label="expected distance between\n\

expected value and \naverage of $N$ random variables.")

plt.plot( N_array, np.sqrt(expected_value)/np.sqrt(N_array), lw = 2, ls = "--",

label = r"$\frac{\sqrt{\lambda}}{\sqrt{N}}$" )

plt.legend()

plt.title( "How 'fast' is the sample average converging? " );

```

As expected, the expected distance between our sample average and the actual expected value shrinks as $N$ grows large. But also notice that the *rate* of convergence decreases, that is, we need only 10 000 additional samples to move from 0.020 to 0.015, a difference of 0.005, but *20 000* more samples to again decrease from 0.015 to 0.010, again only a 0.005 decrease.

It turns out we can measure this rate of convergence. Above I have plotted a second line, the function $\sqrt{\lambda}/\sqrt{N}$. This was not chosen arbitrarily. In most cases, given a sequence of random variable distributed like $Z$, the rate of convergence to $E[Z]$ of the Law of Large Numbers is

$$ \frac{ \sqrt{ \; Var(Z) \; } }{\sqrt{N} }$$

This is useful to know: for a given large $N$, we know (on average) how far away we are from the estimate. On the other hand, in a Bayesian setting, this can seem like a useless result: Bayesian analysis is OK with uncertainty so what's the *statistical* point of adding extra precise digits? Though drawing samples can be so computationally cheap that having a *larger* $N$ is fine too.

### How do we compute $Var(Z)$ though?

The variance is simply another expected value that can be approximated! Consider the following, once we have the expected value (by using the Law of Large Numbers to estimate it, denote it $\mu$), we can estimate the variance:

$$ \frac{1}{N}\sum_{i=1}^N \;(Z_i - \mu)^2 \rightarrow E[ \;( Z - \mu)^2 \;] = Var( Z )$$

### Expected values and probabilities

There is an even less explicit relationship between expected value and estimating probabilities. Define the *indicator function*

$$\mathbb{1}_A(x) =

\begin{cases} 1 & x \in A \\\\

0 & else

\end{cases}

$$

Then, by the law of large numbers, if we have many samples $X_i$, we can estimate the probability of an event $A$, denoted $P(A)$, by:

$$ \frac{1}{N} \sum_{i=1}^N \mathbb{1}_A(X_i) \rightarrow E[\mathbb{1}_A(X)] = P(A) $$

Again, this is fairly obvious after a moments thought: the indicator function is only 1 if the event occurs, so we are summing only the times the event occurs and dividing by the total number of trials (consider how we usually approximate probabilities using frequencies). For example, suppose we wish to estimate the probability that a $Z \sim Exp(.5)$ is greater than 5, and we have many samples from a $Exp(.5)$ distribution.

$$ P( Z > 5 ) = \sum_{i=1}^N \mathbb{1}_{z > 5 }(Z_i) $$

```

N = 10000

print( np.mean( [ np.random.exponential( 0.5 ) > 5 for i in range(N) ] ) )

```

### What does this all have to do with Bayesian statistics?

*Point estimates*, to be introduced in the next chapter, in Bayesian inference are computed using expected values. In more analytical Bayesian inference, we would have been required to evaluate complicated expected values represented as multi-dimensional integrals. No longer. If we can sample from the posterior distribution directly, we simply need to evaluate averages. Much easier. If accuracy is a priority, plots like the ones above show how fast you are converging. And if further accuracy is desired, just take more samples from the posterior.

When is enough enough? When can you stop drawing samples from the posterior? That is the practitioners decision, and also dependent on the variance of the samples (recall from above a high variance means the average will converge slower).

We also should understand when the Law of Large Numbers fails. As the name implies, and comparing the graphs above for small $N$, the Law is only true for large sample sizes. Without this, the asymptotic result is not reliable. Knowing in what situations the Law fails can give us *confidence in how unconfident we should be*. The next section deals with this issue.

## The Disorder of Small Numbers

The Law of Large Numbers is only valid as $N$ gets *infinitely* large: never truly attainable. While the law is a powerful tool, it is foolhardy to apply it liberally. Our next example illustrates this.

##### Example: Aggregated geographic data

Often data comes in aggregated form. For instance, data may be grouped by state, county, or city level. Of course, the population numbers vary per geographic area. If the data is an average of some characteristic of each the geographic areas, we must be conscious of the Law of Large Numbers and how it can *fail* for areas with small populations.

We will observe this on a toy dataset. Suppose there are five thousand counties in our dataset. Furthermore, population number in each state are uniformly distributed between 100 and 1500. The way the population numbers are generated is irrelevant to the discussion, so we do not justify this. We are interested in measuring the average height of individuals per county. Unbeknownst to us, height does **not** vary across county, and each individual, regardless of the county he or she is currently living in, has the same distribution of what their height may be:

$$ \text{height} \sim \text{Normal}(150, 15 ) $$

We aggregate the individuals at the county level, so we only have data for the *average in the county*. What might our dataset look like?

```

figsize( 12.5, 4)

std_height = 15

mean_height = 150

n_counties = 5000

pop_generator = np.random.randint

norm = np.random.normal

#generate some artificial population numbers

population = pop_generator(100, 1500, n_counties )

average_across_county = np.zeros( n_counties )

for i in range( n_counties ):

#generate some individuals and take the mean

average_across_county[i] = norm(mean_height, 1./std_height,

population[i] ).mean()

#located the counties with the apparently most extreme average heights.

i_min = np.argmin( average_across_county )

i_max = np.argmax( average_across_county )

#plot population size vs. recorded average

plt.scatter( population, average_across_county, alpha = 0.5, c="#7A68A6")

plt.scatter( [ population[i_min], population[i_max] ],

[average_across_county[i_min], average_across_county[i_max] ],

s = 60, marker = "o", facecolors = "none",

edgecolors = "#A60628", linewidths = 1.5,

label="extreme heights")

plt.xlim( 100, 1500 )

plt.title( "Average height vs. County Population")

plt.xlabel("County Population")

plt.ylabel("Average height in county")

plt.plot( [100, 1500], [150, 150], color = "k", label = "true expected \

height", ls="--" )

plt.legend(scatterpoints = 1);

```

What do we observe? *Without accounting for population sizes* we run the risk of making an enormous inference error: if we ignored population size, we would say that the county with the shortest and tallest individuals have been correctly circled. But this inference is wrong for the following reason. These two counties do *not* necessarily have the most extreme heights. The error results from the calculated average of smaller populations not being a good reflection of the true expected value of the population (which in truth should be $\mu =150$). The sample size/population size/$N$, whatever you wish to call it, is simply too small to invoke the Law of Large Numbers effectively.

We provide more damning evidence against this inference. Recall the population numbers were uniformly distributed over 100 to 1500. Our intuition should tell us that the counties with the most extreme population heights should also be uniformly spread over 100 to 4000, and certainly independent of the county's population. Not so. Below are the population sizes of the counties with the most extreme heights.

```

print("Population sizes of 10 'shortest' counties: ")

print(population[ np.argsort( average_across_county )[:10] ], '\n')

print("Population sizes of 10 'tallest' counties: ")

print(population[ np.argsort( -average_across_county )[:10] ])

```

Not at all uniform over 100 to 1500. This is an absolute failure of the Law of Large Numbers.

##### Example: Kaggle's *U.S. Census Return Rate Challenge*

Below is data from the 2010 US census, which partitions populations beyond counties to the level of block groups (which are aggregates of city blocks or equivalents). The dataset is from a Kaggle machine learning competition some colleagues and I participated in. The objective was to predict the census letter mail-back rate of a group block, measured between 0 and 100, using census variables (median income, number of females in the block-group, number of trailer parks, average number of children etc.). Below we plot the census mail-back rate versus block group population:

```

figsize( 12.5, 6.5 )

data = np.genfromtxt( "./data/census_data.csv", skip_header=1,

delimiter= ",")

plt.scatter( data[:,1], data[:,0], alpha = 0.5, c="#7A68A6")

plt.title("Census mail-back rate vs Population")

plt.ylabel("Mail-back rate")

plt.xlabel("population of block-group")

plt.xlim(-100, 15e3 )

plt.ylim( -5, 105)

i_min = np.argmin( data[:,0] )

i_max = np.argmax( data[:,0] )

plt.scatter( [ data[i_min,1], data[i_max, 1] ],

[ data[i_min,0], data[i_max,0] ],

s = 60, marker = "o", facecolors = "none",

edgecolors = "#A60628", linewidths = 1.5,

label="most extreme points")

plt.legend(scatterpoints = 1);

```

The above is a classic phenomenon in statistics. I say *classic* referring to the "shape" of the scatter plot above. It follows a classic triangular form, that tightens as we increase the sample size (as the Law of Large Numbers becomes more exact).

I am perhaps overstressing the point and maybe I should have titled the book *"You don't have big data problems!"*, but here again is an example of the trouble with *small datasets*, not big ones. Simply, small datasets cannot be processed using the Law of Large Numbers. Compare with applying the Law without hassle to big datasets (ex. big data). I mentioned earlier that paradoxically big data prediction problems are solved by relatively simple algorithms. The paradox is partially resolved by understanding that the Law of Large Numbers creates solutions that are *stable*, i.e. adding or subtracting a few data points will not affect the solution much. On the other hand, adding or removing data points to a small dataset can create very different results.

For further reading on the hidden dangers of the Law of Large Numbers, I would highly recommend the excellent manuscript [The Most Dangerous Equation](http://nsm.uh.edu/~dgraur/niv/TheMostDangerousEquation.pdf).

##### Example: How to order Reddit submissions

You may have disagreed with the original statement that the Law of Large numbers is known to everyone, but only implicitly in our subconscious decision making. Consider ratings on online products: how often do you trust an average 5-star rating if there is only 1 reviewer? 2 reviewers? 3 reviewers? We implicitly understand that with such few reviewers that the average rating is **not** a good reflection of the true value of the product.

This has created flaws in how we sort items, and more generally, how we compare items. Many people have realized that sorting online search results by their rating, whether the objects be books, videos, or online comments, return poor results. Often the seemingly top videos or comments have perfect ratings only from a few enthusiastic fans, and truly more quality videos or comments are hidden in later pages with *falsely-substandard* ratings of around 4.8. How can we correct this?

Consider the popular site Reddit (I purposefully did not link to the website as you would never come back). The site hosts links to stories or images, called submissions, for people to comment on. Redditors can vote up or down on each submission (called upvotes and downvotes). Reddit, by default, will sort submissions to a given subreddit by Hot, that is, the submissions that have the most upvotes recently.

<img src="http://i.imgur.com/3v6bz9f.png" />

How would you determine which submissions are the best? There are a number of ways to achieve this:

1. *Popularity*: A submission is considered good if it has many upvotes. A problem with this model is that a submission with hundreds of upvotes, but thousands of downvotes. While being very *popular*, the submission is likely more controversial than best.

2. *Difference*: Using the *difference* of upvotes and downvotes. This solves the above problem, but fails when we consider the temporal nature of submission. Depending on when a submission is posted, the website may be experiencing high or low traffic. The difference method will bias the *Top* submissions to be the those made during high traffic periods, which have accumulated more upvotes than submissions that were not so graced, but are not necessarily the best.

3. *Time adjusted*: Consider using Difference divided by the age of the submission. This creates a *rate*, something like *difference per second*, or *per minute*. An immediate counter-example is, if we use per second, a 1 second old submission with 1 upvote would be better than a 100 second old submission with 99 upvotes. One can avoid this by only considering at least t second old submission. But what is a good t value? Does this mean no submission younger than t is good? We end up comparing unstable quantities with stable quantities (young vs. old submissions).

3. *Ratio*: Rank submissions by the ratio of upvotes to total number of votes (upvotes plus downvotes). This solves the temporal issue, such that new submissions who score well can be considered Top just as likely as older submissions, provided they have many upvotes to total votes. The problem here is that a submission with a single upvote (ratio = 1.0) will beat a submission with 999 upvotes and 1 downvote (ratio = 0.999), but clearly the latter submission is *more likely* to be better.

I used the phrase *more likely* for good reason. It is possible that the former submission, with a single upvote, is in fact a better submission than the later with 999 upvotes. The hesitation to agree with this is because we have not seen the other 999 potential votes the former submission might get. Perhaps it will achieve an additional 999 upvotes and 0 downvotes and be considered better than the latter, though not likely.

What we really want is an estimate of the *true upvote ratio*. Note that the true upvote ratio is not the same as the observed upvote ratio: the true upvote ratio is hidden, and we only observe upvotes vs. downvotes (one can think of the true upvote ratio as "what is the underlying probability someone gives this submission a upvote, versus a downvote"). So the 999 upvote/1 downvote submission probably has a true upvote ratio close to 1, which we can assert with confidence thanks to the Law of Large Numbers, but on the other hand we are much less certain about the true upvote ratio of the submission with only a single upvote. Sounds like a Bayesian problem to me.

One way to determine a prior on the upvote ratio is to look at the historical distribution of upvote ratios. This can be accomplished by scraping Reddit's submissions and determining a distribution. There are a few problems with this technique though:

1. Skewed data: The vast majority of submissions have very few votes, hence there will be many submissions with ratios near the extremes (see the "triangular plot" in the above Kaggle dataset), effectively skewing our distribution to the extremes. One could try to only use submissions with votes greater than some threshold. Again, problems are encountered. There is a tradeoff between number of submissions available to use and a higher threshold with associated ratio precision.